TL;DR

- RAG adds a retrieval step so answers are grounded in your content, reducing hallucinations and keeping brand tone intact.

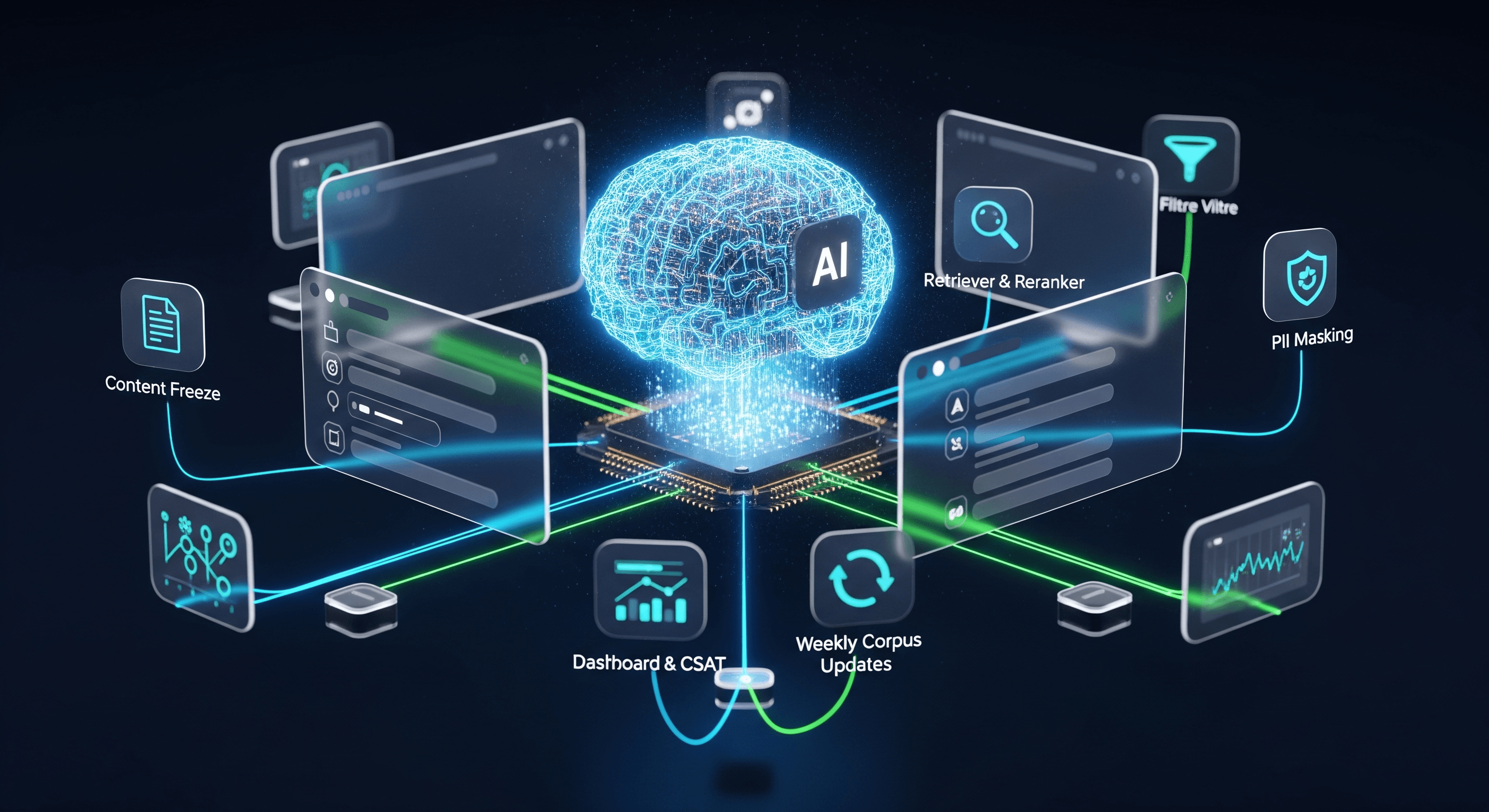

- Treat RAG like a data product: clean sources, sensible chunking, hybrid retrieval + reranking, and an eval harness.

- Control costs/latency with caching, model cascades, output limits, and right-sizing context windows.

- Build privacy in: restrict sources, mask/avoid PII, log safely, and set deletion/retention policies.

- Ship with a go-live checklist and monitoring for recall, faithfulness, latency, and user satisfaction.

If you’ve trialled generic chat on your site, you’ll know the pain: confident but wrong answers, no citations, and rising token bills. Retrieval-augmented generation (RAG) addresses this by letting an LLM answer from your approved content (site pages, PDFs, policies, Notion docs), so replies are grounded, auditable, and on-brand.

“RAG augments a chat model by adding an information-retrieval step so answers incorporate your enterprise content—and can even be constrained to it.”

— Microsoft Azure docs

RAG in Plain English

RAG is a simple loop: (1) turn a user query into one or more search queries; (2) retrieve top-K passages from your vetted index; (3) feed those passages plus the question into a prompt; (4) the model drafts an answer with citations; (5) log, evaluate, and improve. Think of it as a smart, read-only knowledge layer in front of your content.
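The five steps above can be sketched as a single function. This is a minimal illustration under stated assumptions, not a reference implementation: `search`, `generate`, and `log` are hypothetical callables standing in for your retriever, LLM client, and monitoring sink, and step 1 is simplified to using the raw question as the only search query.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Passage:
    url: str
    title: str
    text: str


def build_grounded_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the model can cite it as [n]."""
    sources = "\n\n".join(
        f"[{i}] {p.title} ({p.url})\n{p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}\nAnswer:"
    )


def rag_answer(
    question: str,
    search: Callable[[str], list[Passage]],  # hypothetical: hybrid retriever + reranker
    generate: Callable[[str], str],          # hypothetical: your LLM client call
    log: Callable[[dict], None],             # hypothetical: eval/monitoring sink
    top_k: int = 4,
) -> str:
    # Steps 1-2: this sketch uses the raw question as the only search query.
    passages = search(question)[:top_k]
    # Step 3: combine the retrieved passages and the question into one prompt.
    prompt = build_grounded_prompt(question, passages)
    # Step 4: the model drafts an answer that should cite [n] markers.
    answer = generate(prompt)
    # Step 5: log enough to evaluate retrieval and generation later.
    log({"question": question, "sources": [p.url for p in passages], "answer": answer})
    return answer
```

Keeping the prompt builder separate from the loop makes it easy to unit-test the exact text the model sees, which matters once you start tuning citation instructions.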

Data Pipeline: Sources, Cleaning, Chunking, Indexing

Start with the sources that already explain your product: website content, help centre, PDFs, playbooks, Notion/Confluence docs, and selected emails or tickets (redacted). Keep it boring and consistent—good RAG is 70% content hygiene.

- Extraction: crawl public pages; fetch PDFs and knowledge docs via API; store provenance (URL, title, updatedAt).

- Normalise: strip boilerplate, fix headings, convert tables, and dedupe near-identical pages.

- Chunking: begin with fixed token windows plus light overlap to preserve context (e.g., 512 tokens with 50–100 tokens of overlap as a reproducible baseline).

- Alternatives: evaluate page-level or semantic/hierarchical chunking; some datasets score better with page-level units.

- Indexing: use hybrid search (BM25 + dense vectors) and add a reranker for precision on ambiguous queries.

- Versioning: sync/invalidate on content changes; keep a content hash so stale chunks don’t linger.
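A sketch of the chunking and versioning bullets together: fixed windows with overlap, plus a SHA-256 content hash per chunk so a re-sync can tell which chunks are new and which are stale. Assumptions: whitespace splitting stands in for your model's tokenizer, and the defaults (512 tokens, 64 overlap) are just one point inside the 50–100 overlap range above. `diff_index` is a hypothetical helper, not part of any vector-store API.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Chunk:
    source_url: str
    start: int          # index of the first token of this chunk in the source
    text: str
    content_hash: str


def chunk_tokens(tokens: list[str], size: int = 512, overlap: int = 64) -> list[tuple[int, list[str]]]:
    """Split tokens into windows of `size`, each sharing `overlap` tokens with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append((start, tokens[start:start + size]))
        if start + size >= len(tokens):  # last window reached the end of the document
            break
    return windows


def make_chunks(url: str, text: str, size: int = 512, overlap: int = 64) -> list[Chunk]:
    tokens = text.split()  # assumption: whitespace split in place of a real tokenizer
    chunks = []
    for start, window in chunk_tokens(tokens, size, overlap):
        body = " ".join(window)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        chunks.append(Chunk(url, start, body, digest))
    return chunks


def diff_index(old_hashes: set[str], new_chunks: list[Chunk]) -> tuple[set[str], list[Chunk]]:
    """Return (hashes to delete from the index, chunks to embed and insert)."""
    new_hashes = {c.content_hash for c in new_chunks}
    to_delete = old_hashes - new_hashes
    to_add = [c for c in new_chunks if c.content_hash not in old_hashes]
    return to_delete, to_add
```

Hashing the chunk text rather than the whole page means a small edit only re-embeds the windows it touches, while unchanged windows keep their existing vectors.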

Retrieval Quality: Hybrid Search, Reranking, K, and Context

Poor retrieval equals elegant nonsense. Your goal is high recall without flooding the model with irrelevant text.

- Hybrid first: lexical (BM25) catches exact terms; dense vectors capture semantics—combine both, then rerank.

- Tune K + window size: start with K=8–12 candidates; rerank to the top 3–6; keep the assembled context well inside the model's context window.

- Field boosts: titles, H1s, and headings deserve higher weight than boilerplate.

- Filters: restrict by document type, freshness, locale, or product area to cut noise.

- Rerankers: apply a cross-encoder to reorder candidates before generation for better precision.
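The bullets say to combine lexical and dense results and then rerank, but not how to combine them. One common option, swapped in here as an assumption, is reciprocal rank fusion (RRF): it merges ranked lists by position, so you never have to calibrate BM25 scores against cosine similarities. `k=60` is the constant used in the original RRF paper. The two input rankings are assumed to come from your search engine, and `rerank_score` is a hypothetical stand-in for a cross-encoder.

```python
from collections import defaultdict
from typing import Callable, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of chunk IDs: each list adds 1 / (k + rank) to a chunk's score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))


def hybrid_retrieve(
    bm25_ranking: Sequence[str],
    dense_ranking: Sequence[str],
    rerank_score: Callable[[str], float],  # hypothetical cross-encoder wrapper
    candidates: int = 10,                  # the "K" from the bullets above
    keep: int = 4,                         # what survives reranking into the prompt
) -> list[str]:
    # Fuse lexical and dense results and keep the top `candidates` ...
    pool = reciprocal_rank_fusion([bm25_ranking, dense_ranking])[:candidates]
    # ... then let the reranker reorder the pool and trim it to `keep`.
    return sorted(pool, key=rerank_score, reverse=True)[:keep]
```

For example, fusing `["a", "b", "c"]` (BM25) with `["b", "c", "d"]` (dense) ranks `b` first because both retrievers found it near the top.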

Reality check

Chunking and retrieval strategies are data-dependent: baseline with fixed windows, but validate whether page-level or semantic chunks lift recall/precision on *your* corpus.

Evals & Monitoring: Build a Lightweight Harness

Evaluation should be continuous and cheap to run. Test the retriever and the generator separately, then together. Use a mix of golden Q&A, synthetic variants, and human spot-checks.

- Retriever metrics: Recall@K, Precision@K, Hit@K, and NDCG@K; prioritise recall, since it measures coverage.

- Generation metrics: faithfulness/groundedness (is the answer supported by retrieved text?), response relevance, and citation coverage.

- Tooling: frameworks like RAGAS provide context precision/recall and faithfulness out of the box.

- Dashboards: track answer quality, retrieval errors, latency p50/p95, token usage, and user feedback (thumbs/CSAT).
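The retriever metrics are simple enough to compute without a framework. A minimal sketch, assuming binary relevance (a chunk is either in the gold set or not) and chunk IDs as strings; graded relevance would need a different NDCG gain.

```python
import math
from typing import Sequence


def recall_at_k(retrieved: Sequence[str], relevant: set[str], k: int) -> float:
    """Share of all relevant chunks that appear in the top k (coverage)."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def precision_at_k(retrieved: Sequence[str], relevant: set[str], k: int) -> float:
    """Share of the top k slots filled by relevant chunks."""
    if k <= 0:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / k


def hit_at_k(retrieved: Sequence[str], relevant: set[str], k: int) -> float:
    """1.0 if at least one relevant chunk is in the top k, else 0.0."""
    return 1.0 if set(retrieved[:k]) & relevant else 0.0


def ndcg_at_k(retrieved: Sequence[str], relevant: set[str], k: int) -> float:
    """Binary-relevance NDCG: rewards relevant chunks that rank near the top."""
    dcg = sum(
        1.0 / math.log2(rank + 1)
        for rank, chunk_id in enumerate(retrieved[:k], start=1)
        if chunk_id in relevant
    )
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / idcg if idcg > 0 else 0.0
```

Averaging these per-question scores over a golden set gives the numbers to gate on in CI; recall tells you whether the answer was reachable at all, NDCG whether it was near the top.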

A single eval case can be stored as a small JSON record that names the checks to run:

```json
{
  "eval_case": {
    "question": "What is our warranty period for Pro Plan?",
    "gold_context": ["...Pro Plan has a 24-month warranty..."],
    "gold_answer": "24 months",
    "checks": ["context_recall", "faithfulness", "response_relevance"]
  }
}
```

Cost & Latency Controls (That Actually Work)

RAG can be fast and affordable if you design for it. Focus on avoiding re-paying for repeated prompts and right-sizing outputs.

- Prompt caching & reuse: keep static content (system prompt, instructions) at the start of the prompt so provider-side prefix caching can apply, and reuse retrieval scaffolds across similar questions. Case studies report large savings when this is combined with batching for non-urgent jobs.

- Cap output tokens: ask for concise answers with bullets; token limits meaningfully cut latency.

- Model cascade: try small/fast model first; escalate to larger model on low confidence.

- Context discipline: keep only the top reranked passages; avoid dumping whole pages.

- Edge/semantic caching: cache Q→A for high-frequency intents; invalidate when sources change.
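A sketch of two of the levers above working together: a Q→A cache checked before any model call, and a small-then-large model cascade. Assumptions to flag: this is an exact-match cache on a normalised question (true semantic caching would match by embedding similarity instead); `index_version` is a hypothetical string you bump whenever the index is re-synced, which is how invalidation happens here; and `small`/`large` are hypothetical model wrappers that return a confidence score, taking the question only for brevity where a real pipeline would pass the grounded prompt.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Draft:
    text: str
    confidence: float  # 0..1; how you estimate this depends on your stack (assumption)


Model = Callable[[str], Draft]


class AnswerCache:
    """Exact-match Q->A cache. Keys include the index version, so bumping the
    version after a content sync makes every older entry unreachable."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    @staticmethod
    def _key(question: str, index_version: str) -> str:
        normalised = " ".join(question.lower().split())
        return hashlib.sha256(f"{index_version}|{normalised}".encode("utf-8")).hexdigest()

    def get(self, question: str, index_version: str) -> Optional[str]:
        return self._store.get(self._key(question, index_version))

    def put(self, question: str, index_version: str, answer: str) -> None:
        self._store[self._key(question, index_version)] = answer


def answer_with_cascade(
    question: str,
    index_version: str,
    cache: AnswerCache,
    small: Model,
    large: Model,
    threshold: float = 0.7,
) -> str:
    cached = cache.get(question, index_version)
    if cached is not None:
        return cached              # cache hit: no model call at all
    draft = small(question)        # cheap, fast model first
    if draft.confidence < threshold:
        draft = large(question)    # escalate only on low confidence
    cache.put(question, index_version, draft.text)
    return draft.text
```

Stale entries from old versions stay in memory in this sketch; a production cache would add a TTL or LRU eviction on top.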

Security, Privacy & PII Handling

Trust is earned. Scope your bot to approved content, minimise data collection, and ensure you can honour user rights.

- Source allow-lists: retrieval only from curated indices; no open web.

- PII control: mask/detect PII pre-prompt; redact transcripts; consider local LLMs for masking in sensitive workflows.

- Retention & deletion: set transcript TTLs; support user deletion; separate analytics from raw content.

- Compliance posture: document purposes, legal bases, and access controls; EU guidance highlights privacy risks in LLM systems, so treat RAG data as regulated.
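Masking before the prompt can start as simply as the sketch below, which replaces emails and phone-like numbers with typed placeholders. Treat it as a crude baseline under stated assumptions: the two patterns are illustrative, will miss names and addresses, and will over-match things like dates or order numbers. Dedicated detectors (for example Microsoft Presidio) or the local-LLM masking mentioned above are better fits for sensitive workflows.

```python
import re

# Illustrative patterns only (assumption): tune or replace for your data.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> str:
    """Replace each match with a [LABEL] placeholder before text reaches the prompt or logs."""
    for label, pattern in PII_PATTERNS.items():  # emails first, then phone numbers
        text = pattern.sub(f"[{label}]", text)
    return text
```

Running the same function over transcripts before they are written to logs keeps the masked and logged views consistent.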

Go-Live Checklist

- Content freeze + index snapshot; provenance stored (URL, title, updatedAt, checksum).

- Chunking baseline chosen and documented; alternative strategy under test.

- Hybrid search + reranker enabled; filters set (locale/product/version).

- Eval harness green on recall, faithfulness, relevance; sampling plan for human review.

- Prompt & output caps set; PII masking active; logs scrubbed and rotated.

- Dashboards wired: latency p50/p95, cost per answer, failure modes, CSAT.

- Launch playbook: roll-out to % of traffic; feedback loop in support/CRM.

- Post-launch: weekly corpus update and broken-citation review.
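For the "roll-out to % of traffic" step, one common approach (an assumption here, not something the checklist prescribes) is deterministic hash bucketing: each user ID maps to a stable bucket, so the same visitor always sees the same experience and raising the percentage only adds users. The salt value is hypothetical; changing it reshuffles who lands in the pilot.

```python
import hashlib


def in_rollout(user_id: str, percent: float, salt: str = "rag-pilot") -> bool:
    """Deterministically place a user in the first `percent`% of 10,000 buckets."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # stable bucket in 0..9999
    return bucket < percent * 100                         # e.g. 10% -> buckets 0..999
```

Because a user's bucket never changes, moving from 10% to 25% keeps everyone already in the pilot, which keeps CSAT and feedback comparisons clean.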

How CodeKodex Helps You Ship Reliable RAG (Without Runaway Costs)

We slot into your stack as a pragmatic partner. Our focus: retrieval quality, evals you can trust, and a bill that doesn’t creep.

- Content & index hygiene: source audit, dedupe, chunking trials (fixed vs page-level), and hybrid retrieval with reranking.

- Eval harness: recall/precision for retriever; faithfulness/relevance for answers; dashboards with p50/p95 latency and cost per answer.

- Guardrails: source allow-lists, citation requirements, safe fallbacks, and human handoff for low-confidence queries.

- Cost levers: prompt/context caching, model cascades, and concise answer patterns wired from day one.

Conclusion: Treat RAG Like a Product, Not a Plugin

RAG for websites works when content is clean, retrieval is strong, and quality is measured continuously. Do the unglamorous data work, adopt a simple eval harness, and keep a tight grip on tokens. You’ll earn accurate answers, lower support load, and a better customer experience—without surprising invoices.

Frequently Asked Questions

Use a reproducible baseline (e.g., ~512 tokens with 50–100 tokens of overlap), then test alternatives like page-level or semantic chunking against your eval set. Different corpora favour different strategies.

Track faithfulness/groundedness (is each claim supported by retrieved text?) and citation coverage, not just answer relevance. Tools like RAGAS or similar provide these metrics.

Yes. Lexical (BM25) search catches exact terms and dense vectors catch semantics; add a reranker for precision on tricky queries. This combination is widely reported to improve retrieval quality in production; confirm the lift on your own eval set.

Cache prompts and contexts, cap output tokens, right-size K and context, and use model cascades. Real-world reports show significant savings when caching is applied smartly.

Restrict the bot to approved sources, mask/avoid PII, set retention/deletion policies, and document access controls in line with EU privacy guidance.

Next Steps

Share your top 50 FAQs and links to your docs (site, PDFs, Notion). We’ll return a short RAG readiness note (sources, chunking starting point, retrieval plan) plus an eval checklist you can plug into CI. When you’re ready, we’ll help you pilot on a single high-impact page and expand safely.